It sounds like a scenario straight out of a Ridley Scott film: technology that not only sounds more “real” than actual humans, but looks more convincing, too. Yet it seems that moment has already arrived.

A new study has found people are more likely to think pictures of white faces generated by AI are human than photographs of real individuals.

“Remarkably, white AI faces can convincingly pass as more real than human faces — and people do not realize they are being fooled,” the researchers report.

The team, which includes researchers from Australia, the UK and the Netherlands, said their findings had important implications in the real world, including in identity theft, with the possibility that people could end up being duped by digital impostors.

However, the team said the results did not hold for images of people of color, possibly because the algorithm used to generate AI faces was largely trained on images of white people.

Zak Witkower, a co-author of the research from the University of Amsterdam, said that could have ramifications for areas ranging from online therapy to robots.

“It’s going to produce more realistic situations for white faces than other race faces,” he said.

The team cautioned that this could also mean perceptions of race end up being confounded with perceptions of being “human,” adding that it could perpetuate social biases, including in efforts to find missing children, given that these can depend on AI-generated faces.

Writing in the journal Psychological Science, the team describe how they carried out two experiments. In one, white adults were each shown half of a selection of 100 AI white faces and 100 human white faces. The team chose this approach to avoid potential biases in how own-race faces are recognized compared with other-race faces.

The participants were asked to select whether each face was AI-generated or real, and how confident they were on a 100-point scale.

The results from 124 participants reveal that 66 percent of AI images were rated as human compared with 51 percent of real images.

The team said re-analysis of data from a previous study had found people were more likely to rate white AI faces as human than real white faces. However, this was not the case for faces of people of color, where about 51 percent of both AI and real faces were judged as human. The team added that they did not find the results were affected by the participants’ race.

In a second experiment, participants were asked to rate AI and human faces on 14 attributes, such as age and symmetry, without being told some images were AI-generated.

The team’s analysis of results from 610 participants suggested the main factors that led people to erroneously believe AI faces were human included greater proportionality in the face, greater familiarity and less memorability.

Somewhat ironically, while humans seem unable to tell real faces apart from those generated by AI, the team developed a machine learning system that can do so with 94 percent accuracy.

Clare Sutherland, co-author of the study from the University of Aberdeen, said the study highlighted the importance of tackling biases in AI.

“As the world changes extremely rapidly with the introduction of AI, it’s critical that we make sure that no one is left behind or disadvantaged in any situation — whether due to ethnicity, gender, age, or any other protected characteristic,” she said.

In 2020, a labor attache from the Philippines in Taipei sent a letter to the Ministry of Foreign Affairs demanding that a Filipina worker accused of “cyber-libel” against then-president Rodrigo Duterte be deported. A press release from the Philippines office from the attache accused the woman of “using several social media accounts” to “discredit and malign the President and destabilize the government.” The attache also claimed that the woman had broken Taiwan’s laws. The government responded that she had broken no laws, and that all foreign workers were treated the same as Taiwan citizens and that “their rights are protected,

A white horse stark against a black beach. A family pushes a car through floodwaters in Chiayi County. People play on a beach in Pingtung County, as a nuclear power plant looms in the background. These are just some of the powerful images on display as part of Shen Chao-liang’s (沈昭良) Drifting (Overture) exhibition, currently on display at AKI Gallery in Taipei. For the first time in Shen’s decorated career, his photography seeks to speak to broader, multi-layered issues within the fabric of Taiwanese society. The photographs look towards history, national identity, ecological changes and more to create a collection of images

The recent decline in average room rates is undoubtedly bad news for Taiwan’s hoteliers and homestay operators, but this downturn shouldn’t come as a surprise to anyone. According to statistics published by the Tourism Administration (TA) on March 3, the average cost of a one-night stay in a hotel last year was NT$2,960, down 1.17 percent compared to 2023. (At more than three quarters of Taiwan’s hotels, the average room rate is even lower, because high-end properties charging NT$10,000-plus skew the data.) Homestay guests paid an average of NT$2,405, a 4.15-percent drop year on year. The countrywide hotel occupancy rate fell from

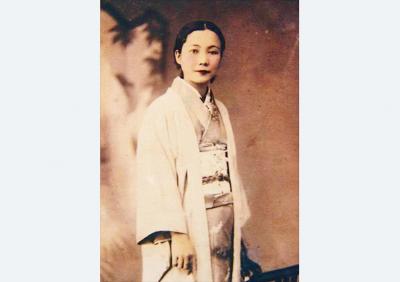

March 16 to March 22 In just a year, Liu Ching-hsiang (劉清香) went from Taiwanese opera performer to arguably Taiwan’s first pop superstar, pumping out hits that captivated the Japanese colony under the moniker Chun-chun (純純). Last week’s Taiwan in Time explored how the Hoklo (commonly known as Taiwanese) theme song for the Chinese silent movie The Peach Girl (桃花泣血記) unexpectedly became the first smash hit after the film’s Taipei premiere in March 1932, in part due to aggressive promotion on the streets. Seeing an opportunity, Columbia Records’ (affiliated with the US entity) Taiwan director Shojiro Kashino asked Liu, who had