Web sites spreading misinformation about health attracted nearly half a billion views on Facebook in April alone, while the COVID-19 pandemic was escalating worldwide, a report found.

Facebook had promised to crack down on conspiracy theories and inaccurate news early in the pandemic, but as its executives promised accountability, its algorithm appears to have fueled traffic to a network of sites sharing dangerous false news, campaign group Avaaz said.

False medical information can be deadly. Researchers at the London School of Hygiene and Tropical Medicine have directly linked a single piece of false information to 800 COVID-19 deaths.

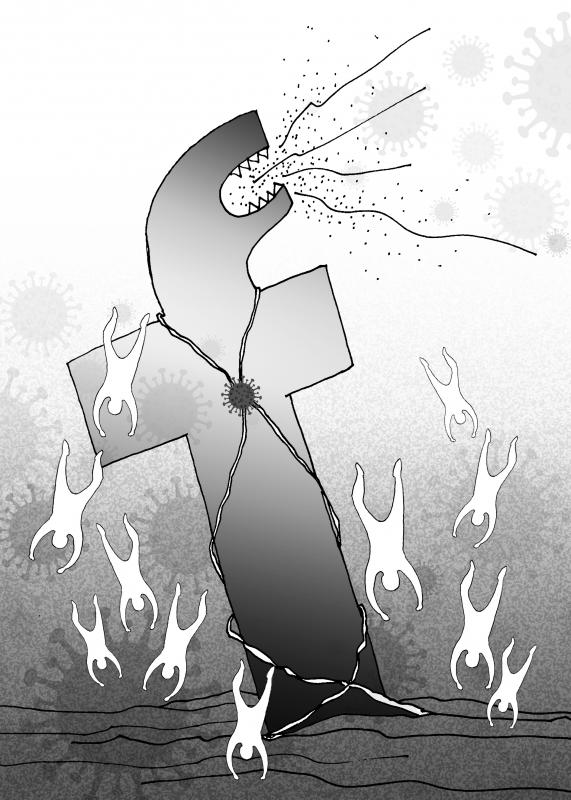

Illustration: Tania Chou

Pages from the top 10 sites peddling inaccurate information and conspiracy theories about health received almost four times as many views on Facebook as the top 10 reputable sites for health information, it said.

The Avaaz report focused on Facebook pages and Web sites that shared large numbers of false claims about the virus. The pages and sites came from a variety of backgrounds, including alternative medicine, organic farming, far-right politics and generalized conspiracy theories.

It found that global networks of 82 sites spreading health misinformation across at least five countries had generated an estimated 3.8 billion views on Facebook in the past 12 months. Their audience peaked in April, with 460 million views in a single month.

“This suggests that just when citizens needed credible health information the most and while Facebook was trying to proactively raise the profile of authoritative health institutions on the platform, its algorithm was potentially undermining these efforts,” the report said.

A relatively small but influential network is responsible for driving huge amounts of traffic to health misinformation sites. Avaaz identified 42 “super-spreader” sites that had 28 million followers and generated an estimated 800 million views.

A single article, which falsely claimed that the American Medical Association was encouraging doctors and hospitals to overestimate deaths from COVID-19, was seen 160 million times.

This vast collective reach suggests that Facebook’s internal systems are not capable of protecting users from health misinformation, even at a critical time when the company has promised to keep users “safe and informed,” the report said.

“Avaaz’s latest research is yet another damning indictment of Facebook’s capacity to amplify false or misleading health information during the pandemic,” said UK Member of Parliament Damian Collins, who led a British parliament investigation into disinformation.

“The majority of this dangerous content is still on Facebook with no warning or context whatsoever,” Collins said. “The time for [Facebook chief executive Mark] Zuckerberg to act is now. He must clean up his platform and help stop this harmful infodemic.”

According to a research paper published in The American Journal of Tropical Medicine and Hygiene, the potential harm of health misinformation is vast. Scanning media and social media reports from 87 countries, researchers identified more than 2,000 claims about COVID-19 that were widely circulating, of which more than 1,800 were provably false.

Some of the false claims were directly harmful: One, which suggested that pure alcohol could kill the virus, has been linked to 800 deaths, and to 60 people going blind after drinking methanol as a cure.

“In India, 12 people, including five children, became sick after drinking liquor made from toxic seed Datura — ummetta plant in local parlance — as a cure to coronavirus disease,” the paper said. “The victims reportedly watched a video on social media that Datura seeds give immunity against COVID-19.”

Beyond the outright dangerous falsehoods, much misinformation is merely useless, but it can still contribute to the spread of COVID-19, as in the case of a South Korean church whose members came to believe that spraying salt water into the mouth could combat the virus.

“They put the nozzle of the spray bottle inside the mouth of a follower who was later confirmed as a patient before they did likewise for other followers as well, without disinfecting the sprayer,” a South Korean official said.

More than 100 followers were infected as a result.

“National and international agencies, including the fact-checking agencies, should not only identify rumors and conspiracies theories and debunk them, but should also engage social media companies to spread correct information,” the researchers said.

Among Facebook’s tactics for fighting misinformation on the platform has been giving independent fact-checkers the ability to put warning labels on items they consider untrue.

Zuckerberg said that fake news would be marginalized by the social network’s algorithm, which determines what content viewers see.

“Posts that are rated as false are demoted and lose on average 80 percent of their future views,” he wrote in 2018.

However, Avaaz found that a huge amount of misinformation slips through Facebook’s verification system, despite having been flagged by fact-checking organizations.

Avaaz analyzed nearly 200 pieces of health misinformation that were shared on the site after being identified as problematic. Only 16 percent carried a warning label; the vast majority, 84 percent, slipped through controls after being translated into other languages or republished in whole or in part.

“These findings point to a gap in Facebook’s ability to detect clones and variations of fact-checked content — especially across multiple languages — and to apply warning labels to them,” the report said.

According to Avaaz, two simple steps could greatly reduce the reach of misinformation. The first would be to proactively correct misinformation that users saw before it was labeled as false, by placing prominent corrections in their Facebook feeds. Research has found that corrections like these can halve belief in incorrect reporting, Avaaz said.

The other step would be to improve the detection and monitoring of translated and cloned material, so that Zuckerberg’s promise to starve such sites of their audiences is actually fulfilled.

A Facebook spokesperson said: “We share Avaaz’s goal of limiting misinformation, but their findings don’t reflect the steps we’ve taken to keep it from spreading on our services. Thanks to our global network of fact-checkers, from April to June, we applied warning labels to 98 million pieces of COVID-19 misinformation and removed 7 million pieces of content that could lead to imminent harm.”

“We’ve directed over 2 billion people to resources from health authorities, and when someone tries to share a link about COVID-19, we show them a pop-up to connect them with credible health information,” they said.
