Experts from around the world have called for greater regulation of artificial intelligence (AI) to prevent it from escaping human control, as global leaders gather in Paris for a summit on the technology.

France, cohosting the two-day gathering with India, has chosen to spotlight AI action this year rather than putting safety concerns front and center, as was the case at the previous meetings at Britain’s Bletchley Park in 2023 and in the South Korean capital, Seoul, last year.

The French vision is for governments, businesses and other actors to come out in favor of global governance for AI and make commitments on sustainability, without setting binding rules.


“We don’t want to spend our time talking only about the risks. There’s the very real opportunity aspect as well,” said Anne Bouverot, AI envoy for French President Emmanuel Macron.

Max Tegmark, head of the US-based Future of Life Institute that has regularly warned of AI’s dangers, said that France should not miss the opportunity to act.

“France has been a wonderful champion of international collaboration and has the opportunity to really lead the rest of the world,” Tegmark said. “There is a big fork in the road here at the Paris summit and it should be embraced.”

Tegmark’s institute has backed yesterday’s launch of a platform dubbed Global Risk and AI Safety Preparedness (GRASP) that aims to map major risks linked to AI and solutions being developed around the world.

“We’ve identified around 300 tools and technologies in answer to these risks,” GRASP coordinator Cyrus Hodes said.

Results from the survey would be passed to the Organisation for Economic Co-operation and Development (OECD) rich-countries club and members of the Global Partnership on Artificial Intelligence — a grouping of almost 30 nations including major European economies, Japan, South Korea and the US that were to meet in Paris yesterday.

The past week also saw the presentation of the first International AI Safety Report on Thursday, compiled by 96 experts and backed by 30 countries, the UN, EU and OECD.

Risks outlined in the document range from the familiar, such as fake content online, to the far more alarming.

“Proof is steadily appearing of additional risks like biological attacks or cyberattacks,” the report’s coordinator and computer scientist Yoshua Bengio said.

In the longer term, 2018 Turing Award winner Bengio fears a possible “loss of control” by humans over AI systems, potentially motivated by “their own will to survive.”

“A lot of people thought that mastering language at the level of ChatGPT-4 was science fiction as recently as six years ago, and then it happened,” Tegmark said.

“The big problem now is that a lot of people in power still have not understood that we’re closer to building artificial general intelligence (AGI) than to figuring out how to control it,” he said.

AGI refers to an AI that would equal or better humans in all fields.

“At worst, these American or Chinese companies lose control over this, and then after that Earth will be run by machines,” Tegmark said.

University of California, Berkeley computer science professor Stuart Russell said one of his greatest fears is “weapons systems where the AI that is controlling that weapon system is deciding who to attack, when to attack, and so on.”

Russell, who is also coordinator of the International Association for Safe and Ethical AI, places the responsibility firmly on governments to set up safeguards against armed AIs.

The solution is very simple: treat the AI industry the same way all other industries are treated, Tegmark said.

“Before somebody can build a new nuclear reactor outside of Paris they have to demonstrate to government-appointed experts that this reactor is safe. That you’re not going to lose control over it... It should be the same for AI,” he said.

Hon Hai Precision Industry Co (鴻海精密) yesterday said that its research institute has launched its first advanced artificial intelligence (AI) large language model (LLM) using traditional Chinese, with technology assistance from Nvidia Corp. Hon Hai, also known as Foxconn Technology Group (富士康科技集團), said the LLM, FoxBrain, is expected to improve its data analysis capabilities for smart manufacturing, and electric vehicle and smart city development. An LLM is a type of AI trained on vast amounts of text data and uses deep learning techniques, particularly neural networks, to process and generate language. They are essential for building and improving AI-powered servers. Nvidia provided assistance

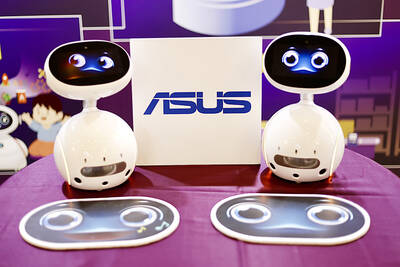

STILL HOPEFUL: Delayed payment of NT$5.35 billion from an Indian server client sent its earnings plunging last year, but the firm expects a gradual pickup ahead Asustek Computer Inc (華碩), the world’s No. 5 PC vendor, yesterday reported an 87 percent slump in net profit for last year, dragged by a massive overdue payment from an Indian cloud service provider. The Indian customer has delayed payment totaling NT$5.35 billion (US$162.7 million), Asustek chief financial officer Nick Wu (吳長榮) told an online earnings conference. Asustek shipped servers to India between April and June last year. The customer told Asustek that it is launching multiple fundraising projects and expected to repay the debt in the short term, Wu said. The Indian customer accounted for less than 10 percent to Asustek’s

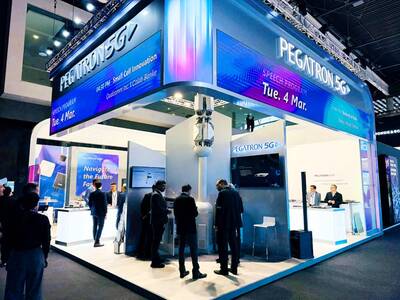

‘DECENT RESULTS’: The company said it is confident thanks to an improving world economy and uptakes in new wireless and AI technologies, despite US uncertainty Pegatron Corp (和碩) yesterday said it plans to build a new server manufacturing factory in the US this year to address US President Donald Trump’s new tariff policy. That would be the second server production base for Pegatron in addition to the existing facilities in Taoyuan, the iPhone assembler said. Servers are one of the new businesses Pegatron has explored in recent years to develop a more balanced product lineup. “We aim to provide our services from a location in the vicinity of our customers,” Pegatron president and chief executive officer Gary Cheng (鄭光治) told an online earnings conference yesterday. “We

LEAK SOURCE? There would be concern over the possibility of tech leaks if TSMC were to form a joint venture to operate Intel’s factories, an analyst said Taiwan Semiconductor Manufacturing Co (TSMC, 台積電) yesterday stayed mum after a report said that the chipmaker has pitched chip designers Nvidia Corp, Advanced Micro Devices Inc and Broadcom Inc about taking a stake in a joint venture to operate Intel Corp’s factories. Industry sources told the Central News Agency (CNA) that the possibility of TSMC proposing to operate Intel’s wafer fabs is low, as the Taiwanese chipmaker has always focused on its core business. There is also concern over possible technology leaks if TSMC were to form a joint venture to operate Intel’s factories, Concord Securities Co (康和證券) analyst Kerry Huang (黃志祺)