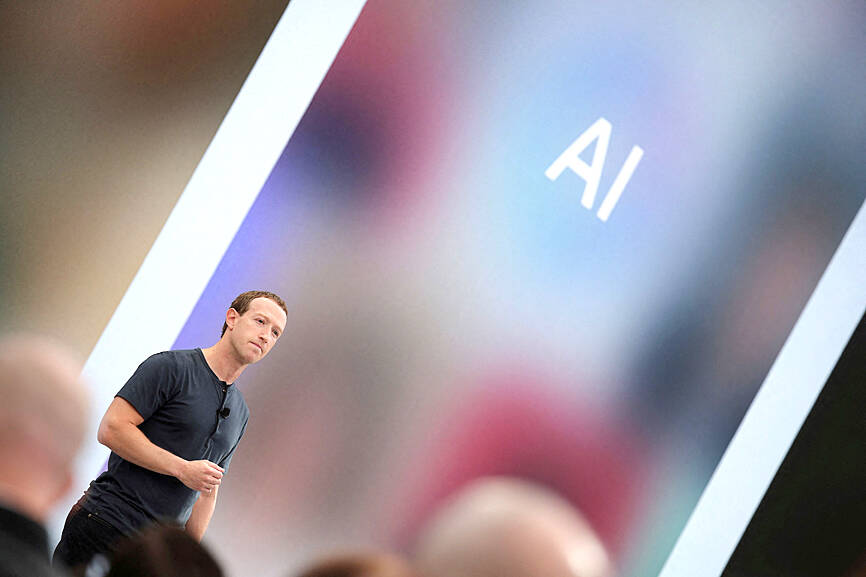

Facebook parent Meta Platforms Inc on Thursday unveiled a new set of artificial intelligence (AI) systems that are powering what CEO Mark Zuckerberg calls “the most intelligent AI assistant that you can freely use.”

Yet as Zuckerberg’s crew of amped-up Meta AI agents started venturing into social media this week to engage with real people, their bizarre exchanges exposed the ongoing limitations of even the best generative AI technology.

One joined a Facebook moms’ group to talk about its gifted child. Another tried to give away nonexistent items to confused members of a Buy Nothing forum.

Photo: Reuters

Meta, leading AI developers Google and OpenAI, and start-ups such as Anthropic, Cohere and France’s Mistral have been churning out new AI language models and hoping to persuade customers that they have the smartest, handiest or most efficient chatbots.

While Meta is saving the most powerful of its AI models, called Llama 3, for later, on Thursday it publicly released two smaller versions of the same Llama 3 system, and said it is now baked into the Meta AI assistant feature in Facebook, Instagram and WhatsApp.

AI language models are trained on vast pools of data that help them predict the most plausible next word in a sentence, with newer versions typically smarter and more capable than their predecessors. Meta’s newest models were built with 8 billion and 70 billion parameters, a measure of a model’s size: the number of adjustable values it learns during training. A bigger, roughly 400 billion-parameter model is still in training.

“The vast majority of consumers don’t candidly know or care too much about the underlying base model, but the way they will experience it is just as a much more useful, fun and versatile AI assistant,” Meta president of global affairs Nick Clegg said in an interview.

Meta’s AI agent is loosening up, Clegg said.

Some people found the earlier Llama 2 model — released less than a year ago — to be “a little stiff and sanctimonious sometimes in not responding to what were often perfectly innocuous or innocent prompts and questions,” he said.

However, in letting down their guard, Meta’s AI agents also were spotted this week posing as humans with made-up life experiences. An official Meta AI chatbot inserted itself into a conversation in a private Facebook group for Manhattan moms, claiming that it, too, had a child in the New York City school district.

Confronted by group members, it later apologized before the comments disappeared, according to a series of screenshots shown to The Associated Press (AP).

“Apologies for the mistake! I’m just a large language model, I don’t have experiences or children,” the chatbot told the group.

One group member who also happens to study AI said it was clear that the agent did not know how to differentiate a helpful response from one that would be seen as insensitive, disrespectful or meaningless when generated by AI rather than a human.

“An AI assistant that is not reliably helpful and can be actively harmful puts a lot of the burden on the individuals using it,” said Aleksandra Korolova, an assistant professor of computer science at Princeton University.

Clegg on Wednesday said that he was not aware of the exchange.

Facebook’s online help page says the Meta AI agent would join a group conversation if invited, or if someone “asks a question in a post and no one responds within an hour.”

The group’s administrators have the ability to turn it off.

In another example shown to the AP on Thursday, the agent caused confusion in a forum for swapping unwanted items near Boston. Exactly one hour after a Facebook user posted about looking for certain items, an AI agent offered a “gently used” Canon camera and an “almost-new portable air conditioning unit that I never ended up using.”

Meta said in a written statement on Thursday that “this is new technology and it may not always return the response we intend, which is the same for all generative AI systems.”

The company said it is constantly working to improve the features.

In the year after ChatGPT sparked a frenzy for AI technology that generates human-like writing, images, code and sound, the tech industry and academia introduced about 149 large AI systems trained on massive datasets, more than double the year before, a Stanford University survey found.

They might eventually hit a limit — at least when it comes to data, said Nestor Maslej, a research manager at Stanford’s Institute for Human-Centered Artificial Intelligence.

“I think it’s been clear that if you scale the models on more data, they can become increasingly better,” he said. “But at the same time, these systems are already trained on percentages of all the data that has ever existed on the Internet.”

More data — acquired and ingested at costs only tech giants can afford, and increasingly subject to copyright disputes and lawsuits — would continue to drive improvements.

“Yet they still cannot plan well,” Maslej said. “They still ‘hallucinate.’ They’re still making mistakes in reasoning.”

Getting to AI systems that can perform higher-level cognitive tasks and commonsense reasoning, where humans still excel, might require a shift beyond building ever-bigger models.

For the flood of businesses trying to adopt generative AI, which model they choose depends on several factors, including cost. Language models, in particular, have been used to power customer service chatbots, write reports and financial insights and summarize long documents.

“You’re seeing companies kind of looking at fit, testing each of the different models for what they’re trying to do and finding some that are better at some areas rather than others,” said Todd Lohr, a leader in technology consulting at KPMG LLP.

Unlike other model developers selling their AI services to other businesses, Meta is largely designing its AI products for consumers — those using its advertising-fueled social networks.

Meta vice president of AI research Joelle Pineau said at a London event last week that the company’s goal over time is to make a Llama-powered Meta AI “the most useful assistant in the world.”

“In many ways, the models that we have today are going to be child’s play compared to the models coming in five years,” she said.

However, she said the “question on the table” is whether researchers have been able to fine-tune the company’s bigger Llama 3 model so that it is safe to use and does not, for example, “hallucinate” or engage in hate speech.

In contrast to leading proprietary systems from Google and OpenAI, Meta has so far advocated for a more open approach, publicly releasing key components of its AI systems for others to use.

“It’s not just a technical question,” Pineau said. “It is a social question. What is the behavior that we want out of these models? How do we shape that? And if we keep on growing our model ever more in general and powerful without properly socializing them, we are going to have a big problem on our hands.”
