Dirk Smeesters had spent several years of his career as a social psychologist at Erasmus University in Rotterdam studying how consumers behaved in different situations. Did color have an effect on what they bought? How did death-related stories in the media affect how people picked products? Was it better to use supermodels in cosmetics advertisements than average-looking women?

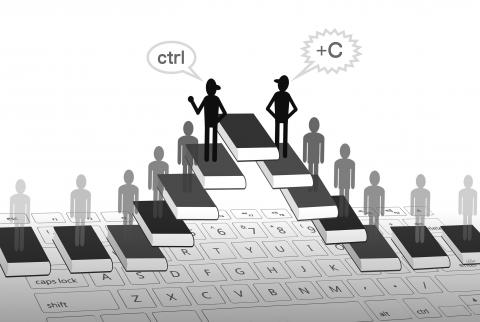

The questions are certainly intriguing, but unfortunately for anyone wanting truthful answers, some of Smeesters’ work turned out to be fraudulent. The psychologist, who admitted “massaging” the data in some of his papers, resigned from his position in June after being investigated by his university, which had been tipped off by Uri Simonsohn from the University of Pennsylvania in Philadelphia. Simonsohn carried out an independent analysis of the data and was suspicious of how perfect many of Smeesters’ results seemed when, statistically speaking, there should have been more variation in his measurements.

The case, which led to two scientific papers being retracted, came on the heels of an even bigger fraud, uncovered last year, perpetrated by the Dutch psychologist Diederik Stapel. He was found to have fabricated data for years and published it in at least 30 peer-reviewed papers, including a report in the journal Science about how untidy environments may encourage discrimination.

The cases have sent shockwaves through a discipline that was already facing serious questions about plagiarism.

“In many respects, psychology is at a crossroads — the decisions we take now will determine whether or not it remains a serious, credible, scientific discipline along with the harder sciences,” said Chris Chambers, a psychologist at Cardiff University. “We have to be open about the problems that exist in psychology and understand that, though they’re not unique to psychology, that doesn’t mean we shouldn’t be addressing them. If we do that, we can end up leading the other sciences rather than following them.”

Cases of scientific misconduct tend to hit the headlines precisely because scientists are supposed to occupy a moral high ground when it comes to the search for truth about nature. The scientific method developed as a way to weed out human bias. However, scientists, like anyone else, can be prone to bias in their bid for a place in the history books.

Increasing competition for shrinking government budgets for research and the disproportionately large rewards for publishing in the best journals have exacerbated the temptation to fudge results or ignore inconvenient data.

Massaged results can send other researchers down the wrong track, wasting time and money trying to replicate them. Worse, in medicine, they can delay the development of life-saving treatments or prolong the use of therapies that are ineffective or dangerous. Malpractice rarely comes to light, perhaps because scientific fraud is often easy to perpetrate but hard to uncover.

The field of psychology has come under particular scrutiny because many results in the scientific literature defy replication by other researchers. Critics say it is too easy to publish psychology papers which rely on sample sizes that are too small, for example, or to publish only those results that support a favored hypothesis. Outright fraud is almost certainly just a small part of that problem, but high-profile examples have exposed a grayer area of bad or lazy scientific practice that many had preferred to brush under the carpet.

Many scientists, aided by software and statistical techniques to catch cheats, are now speaking up, calling on colleagues to put their houses in order.

Those who document misconduct in scientific research talk of a spectrum of bad practices. At the sharp end are plagiarism, fabrication and falsification of research. At the other end are questionable practices such as adding an author’s name to a paper when they have not contributed to the work, sloppiness in methods or not disclosing conflicts of interest.

“Outright fraud is somewhat impossible to estimate, because if you’re really good at it you wouldn’t be detectable,” Simonsohn said. “It’s like asking how much of our money is fake money — we only catch the really bad fakers, the good fakers we never catch.”

If things go wrong, the responsibility to investigate and punish misconduct rests with the scientists’ employers, the academic institutions. However, these organizations face something of a conflict of interest.

“Some of the big institutions ... were really in denial and wanted to say that it didn’t happen under their roof,” said Liz Wager of the Committee on Publication Ethics (COPE). “They’re gradually realizing that it’s better to admit that it could happen and tell us what you’re doing about it, rather than to say, ‘It could never happen.’”

There are indications that bad practice — particularly at the less serious end of the scale — is rife. In 2009, Daniele Fanelli of the University of Edinburgh carried out a meta-analysis that pooled the results of 21 surveys of researchers who were asked whether they or their colleagues had fabricated or falsified research.

Publishing his results in the journal PLoS One, he found that an average of 1.97 percent of scientists admitted to having “fabricated, falsified or modified data or results at least once — a serious form of misconduct by any standard — and up to 33.7 percent admitted other questionable research practices. In surveys asking about the behavior of colleagues, admission rates were 14.12 percent for falsification, and up to 72 percent for other questionable research practices.”

A 2006 analysis of the images published in the Journal of Cell Biology found that about 1 percent had been deliberately falsified.

According to a report in the journal Nature, published retractions in scientific journals have increased around 1,200 percent over the past decade, even though the number of published papers has gone up by only 44 percent. About half of these retractions are suspected cases of misconduct.
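To put the two growth figures on the same footing, the short calculation below estimates how much the rate of retractions per published paper has grown. It is only a sketch: it assumes that "increased around 1,200 percent" means retractions are now about 13 times the earlier level, and the variable names are illustrative.

```python
# Figures quoted above; how they combine is an assumption of this sketch.
retraction_increase_pct = 1200  # growth in published retractions
paper_increase_pct = 44         # growth in published papers

# Convert percentage increases into multiplication factors.
retraction_factor = 1 + retraction_increase_pct / 100
paper_factor = 1 + paper_increase_pct / 100

# Growth in retractions per published paper.
rate_factor = retraction_factor / paper_factor
print(f"Retractions per paper grew by a factor of about {rate_factor:.1f}")
```

On that reading, retractions have grown roughly nine times faster than the literature itself, so the increase cannot be explained simply by more papers being published.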

Wager says these numbers make it difficult for a large, research-intensive university, which might employ thousands of researchers, to maintain the line that misconduct is vanishingly rare.

New tools, such as text-matching software, have also increased the detection rates of fraud and plagiarism. Journals routinely use these to check papers as they are submitted or undergoing peer review.

“Just the fact that the software is out there and there are people who can look at stuff, that has really alerted the world to the fact that plagiarism and redundant publication are probably way more common than we realized,” Wager said. “That probably explains, to a big extent, this increase we’ve seen in retractions.”

Ferric Fang, a professor at the University of Washington School of Medicine and editor-in-chief of the journal Infection and Immunity, thinks increased scrutiny is not the only factor and that the rate of retractions is indicative of some deeper problem.

He was alerted to concerns about the work of a Japanese scientist who had published in his journal. A reviewer for another journal noticed that Naoki Mori of the University of the Ryukyus in Japan had duplicated images in some of his papers and had given them different labels, as if they represented different measurements. An investigation revealed evidence of widespread data manipulation and this led Fang to retract six of Mori’s papers from his journal. Other journals followed suit.

The refrain from many scientists is that the scientific method is meant to be self-correcting. Bad results, corrupt data or fraud will get found out — either when they cannot be replicated or when they are proved incorrect in subsequent studies — and public retractions are a sign of strength.

That works up to a point, Fang said.

“It ended up that there were 31 papers from the [Mori] laboratory that were retracted, many of those papers had been in the literature for five to 10 years,” he said. “I realized that ‘scientific literature is self-correcting’ is a little bit simplistic. These papers had been read many times, downloaded, cited and reviewed by peers and it was just by the chance observation by a very attentive reviewer that opened this whole case of serious misconduct.”

Extraordinary claims will elicit lots of attention. However, in cases such as Mori’s, where work is flawed and falsified but the results are not particularly surprising, misconduct is difficult to detect.

In psychology research, there is a particular problem with researchers who selectively publish some of their experiments to guarantee a positive result.

“Let’s say you have this theory that, when you play Mozart, people want to pay more for musical instruments,” Simonsohn said. “So you do a study and you play Mozart [or not] and you ask people, ‘How much would you pay for a piano or flute and five instruments?’”

If it turned out that only the price of a single type of instrument, violins, say, went up after people had listened to Mozart, it would be possible to publish a paper that omitted the fact that the researchers had asked about other instruments. This would not allow the reader to make a proper assessment of the strength of the effect that Mozart may (or may not) have on how much a person would pay for instruments.
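A small simulation can show why the omission matters. The sketch below assumes seven instruments, 30 participants per group, a conventional 5 percent significance threshold and no real Mozart effect at all. It treats each instrument as an independent comparison with a known spread, which is a simplification. None of these numbers come from Simonsohn, and the function name is hypothetical.

```python
import random
from statistics import NormalDist, mean


def false_positive_rate(n_instruments=7, n_per_group=30, alpha=0.05,
                        n_studies=2000, seed=0):
    """Share of no-effect studies where at least one instrument looks significant."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    # Standard error of a difference in means when both groups have SD 1.
    se = (2 / n_per_group) ** 0.5
    hits = 0
    for _ in range(n_studies):
        for _ in range(n_instruments):
            # Both groups come from the same distribution: Mozart does nothing.
            mozart = [rng.gauss(0, 1) for _ in range(n_per_group)]
            silence = [rng.gauss(0, 1) for _ in range(n_per_group)]
            z = (mean(mozart) - mean(silence)) / se
            p_value = 2 * (1 - std_normal.cdf(abs(z)))  # two-sided z-test
            if p_value < alpha:
                hits += 1  # one "publishable" instrument is enough
                break
    return hits / n_studies


# Chance that at least one of seven independent tests passes by luck alone.
analytic = 1 - (1 - 0.05) ** 7
simulated = false_positive_rate()
print(f"analytic:  {analytic:.3f}")
print(f"simulated: {simulated:.3f}")
```

Under these assumptions, about 30 percent of studies would turn up at least one "significant" instrument even though the music has no effect. A reader shown only that one instrument would take the result for a one-in-20 fluke at worst.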

Fanelli has examined this positive-result bias. He looked at 4,600 studies across all disciplines between 1990 and 2007 and counted the number of papers that, after declaring an intent to test a particular hypothesis, reported positive support for it. The overall frequency of such positive results had grown by more than 22 percent over this time period. In a separate study, Fanelli found that “the odds of reporting a positive result were around five times higher among papers in the disciplines of psychology and psychiatry and economics and business compared with space science.”

This issue is exacerbated in psychological research by the “file-drawer” problem, in which scientists who try to replicate and confirm previous studies find it difficult to get their research published. Scientific journals want to highlight novel, often surprising, findings. Negative results are unattractive to journal editors and lie in the bottom of researchers’ filing cabinets, destined never to see the light of day.

“We have a culture which values novelty above all else, neophilia really, and that creates a strong publication bias,” Chambers said. “To get into a good journal, you have to be publishing something novel, it helps if it’s counter-intuitive and it also has to be a positive finding. You put those things together and you create a dangerous problem for the field.”

When Daryl Bem, a psychologist at Cornell University in New York, published sensational findings last year that seemed to show evidence for psychic effects in people, many scientists were unsurprisingly skeptical. However, when psychologists later tried to publish their failed attempts to replicate Bem’s work, they found journals refused to give them space. After repeated attempts elsewhere, a team of psychologists led by Chris French at Goldsmiths, University of London, eventually placed their negative results in the journal PLoS One this year.

There is no suggestion of misconduct in Bem’s research, but the lack of an avenue in which to publish failed attempts at replication suggests self-correction can be compromised and people such as Smeesters and Stapel can remain undetected for a long time.

“Usually there is no official mechanism for a whistleblower to take if they suspect fraud,” Chambers said. “You often hear of cases where junior members of a department, such as PhD students, will be the ones that are closest to the coalface and will be the ones to identify suspicious cases. But what kind of support do they have?”

Fang is aware that his spotlight on misconduct has the potential to show up scientists in a disproportionately bad light — as yet another public institution that cannot be trusted beyond its own self-interest. Yet he says staying quiet about the issue is not an option.

“Science has the potential to address some of the most important problems in society and for that to happen, scientists have to be trusted by society and they have to be able to trust each other’s work,” Fang said. “If we are seen as just another special interest group that are doing whatever it takes to advance our careers and that the work is not necessarily reliable, it’s tremendously damaging for all of society because we need to be able to rely on science.”

Getting it wrong

The South Korean scientist Hwang Woo-suk rose to international acclaim in 2004 when he announced, in the journal Science, that he had extracted stem cells from cloned human embryos.

The following year, Hwang published results showing he had made stem cell lines from the skin of patients — a technique that could help create personalized cures for people with degenerative diseases. However, by 2006, Hwang’s career was in tatters when it emerged that he had fabricated material for his research papers. Seoul National University sacked him and, after an investigation in 2009, he was also convicted of embezzling research funds.

Around the same time, a Norwegian researcher, Jon Sudbo, admitted to fabricating and falsifying data. Over many years of malpractice, he perpetrated one of the biggest scientific frauds ever carried out by a single researcher — the fabrication of an entire 900-patient study, which was published in the Lancet in 2005.

Marc Hauser, a psychologist at Harvard University whose research interests included cognition in non-human primates, resigned in August last year after a three-year investigation by his institution found he was responsible for eight counts of scientific misconduct. The alarm was raised by some of his students, who disagreed with Hauser’s interpretations of experiments that involved the somewhat subjective procedure of working out a monkey’s thoughts based on its response to some sight or sound.
